## Compute and plot a PCA using geomorph:

pca <- geomorph::gm.prcomp(

A = gpa$coords,

scale = FALSE

)

plot(pca)

{geomorph}.

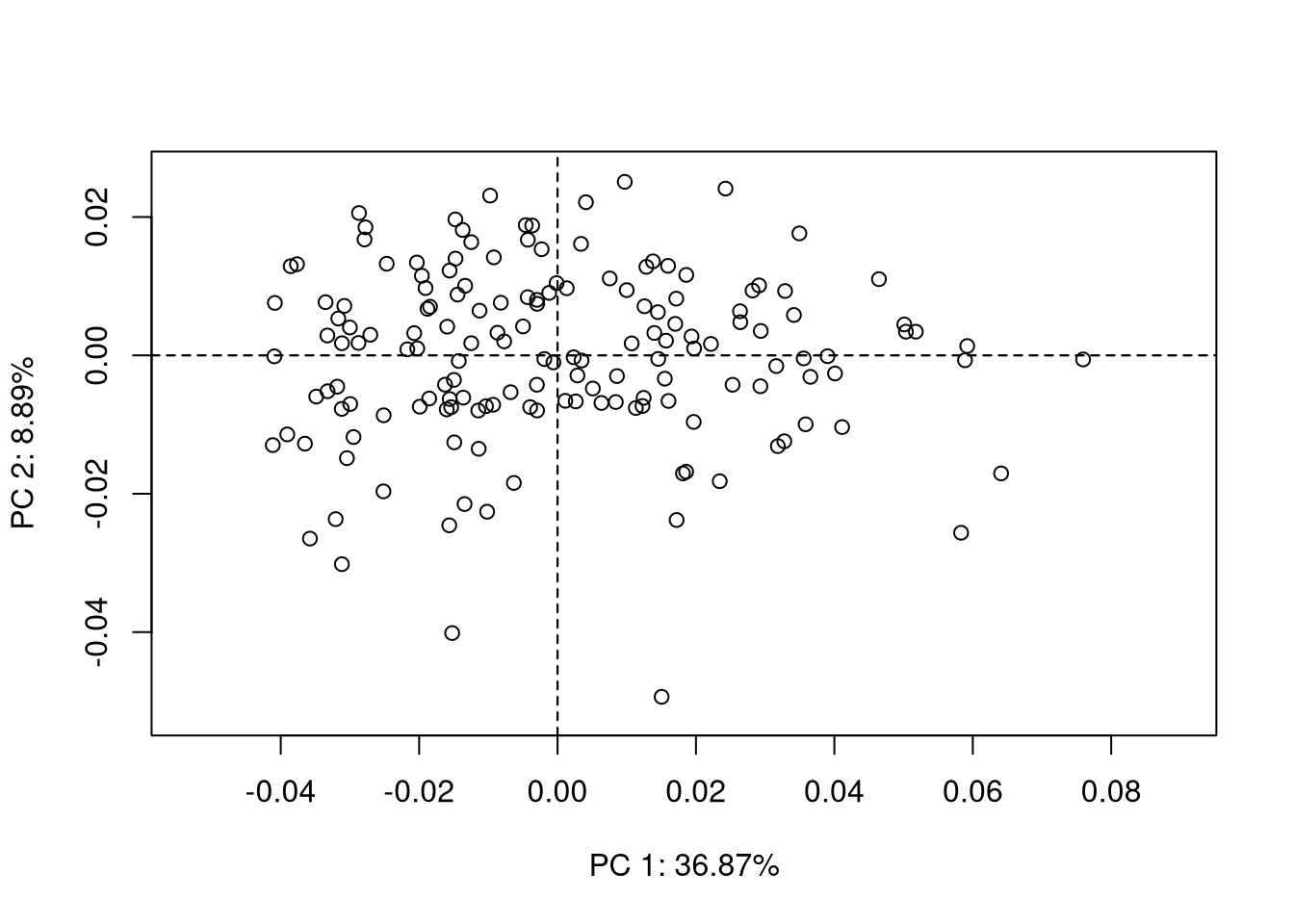

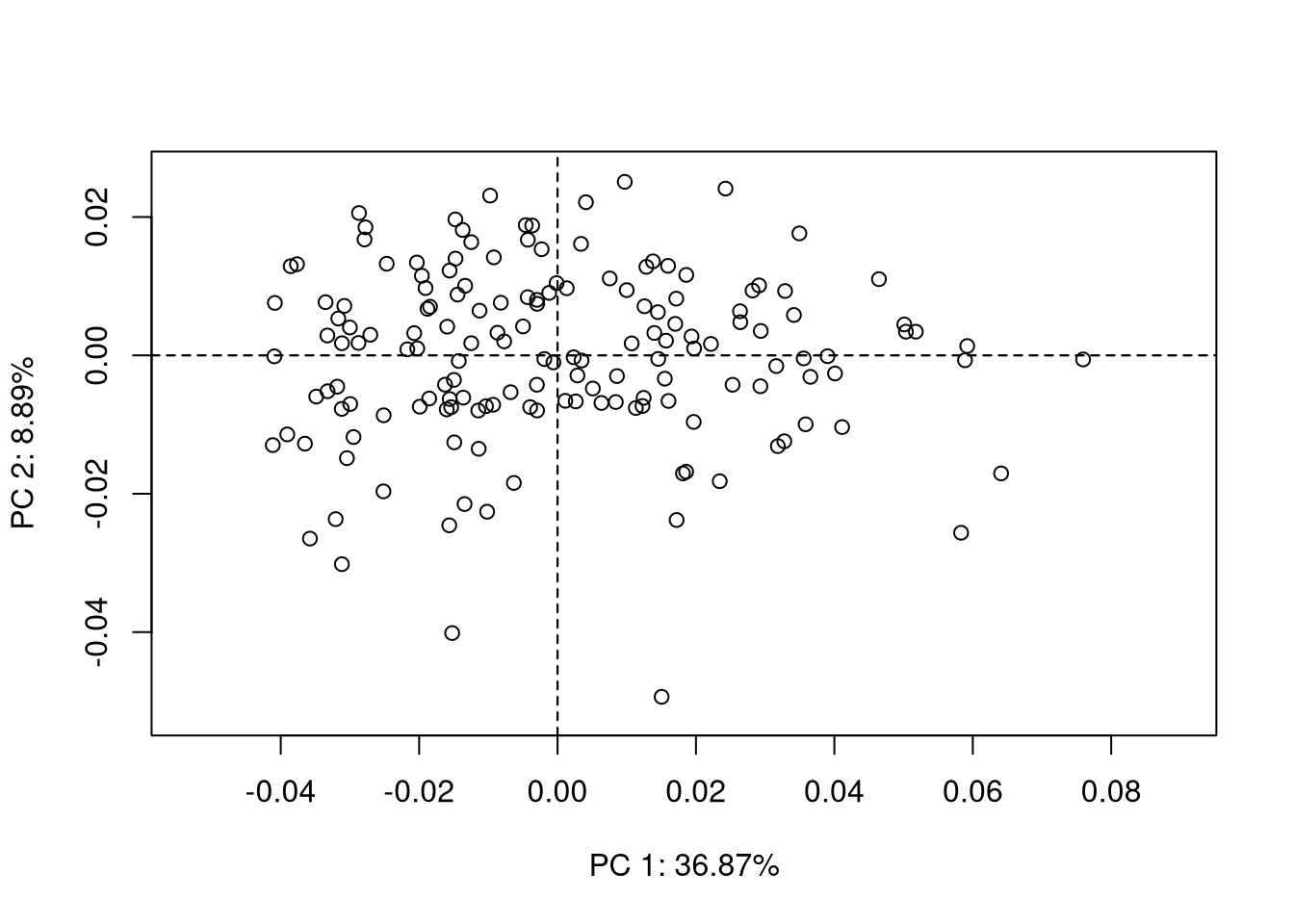

Once the landmark coordinates are aligned using procrustes superimposition, the next step is usually to perform a principal component analysis on procrustes coordinates. We suppose here that you are already roughly familiar with PCA. If not, there are many excellent references out there on this topic (Escofier and Pagès 2016; Jolliffe 2002; Saporta 2011).

In R, if the Procrustes analysis was previously done using the package {geomorph}, performing a PCA on Procrustes coordinates is straightforward:

## Compute and plot a PCA using geomorph:

pca <- geomorph::gm.prcomp(

A = gpa$coords,

scale = FALSE

)

plot(pca)

{geomorph}.

The PCA plots produced using {geomorph} are moderately appealing, but have an interesting feature: they allow you to select one point on the plot and visualize interactively the corresponding shape. This is the role of the function picknplot.shape(), that you can try using the following code:

## Picking a point on the PCA plot:

picknplot.shape(plot(pca))Note that you can save as PNG files the shapes that you pick on the plot.

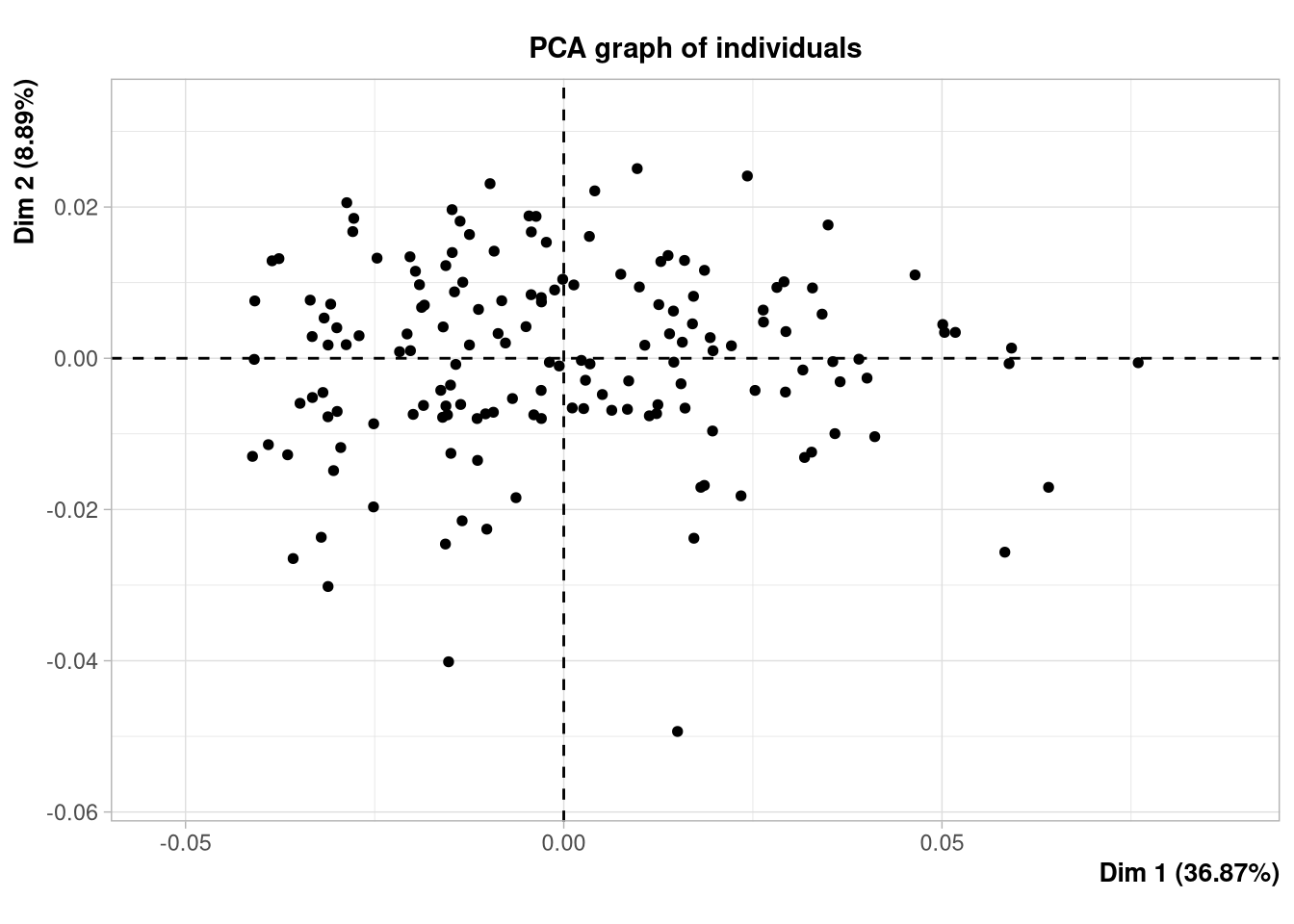

However, if you don’t need the interactive function, you might want more fancy plots, or simply want to get rid of {geomorph} for subsequent statistical analyses. For that, a first step would be to convert your 3D-array \(A = (a_{ijk})\) into a 2D-dataframe, with one row per individual, and all landmarks coordinates in columns. This can be done easily with:

## Reshape the array as a dataframe:

dtf <- two.d.array(gpa$coord)

dim(dtf)[1] 159 206Let’s visualize the first rows and columns of this dataframe:

## Display the first 5 individuals, and the first 3 landmarks:

print(dtf[1:5, 1:6]) 1.X 1.Y 2.X 2.Y 3.X 3.Y

M-180 -0.1421087 0.03522576 -0.1033258 0.01285838 -0.07483114 0.02755803

M-181 -0.1477757 0.03593403 -0.1009377 0.01105930 -0.07654347 0.02788646

M-182 -0.1455329 0.03649664 -0.1059314 0.01253985 -0.07322652 0.02778743

M-183 -0.1462273 0.03528867 -0.1085387 0.01100597 -0.07526635 0.02707705

M-184 -0.1473910 0.03726782 -0.1053168 0.01153009 -0.07220594 0.02792505The principal component analysis can now be performed on this dataframe, using more appealing functions, here coming from the R package {FactoMineR}:

## An alternative PCA plot with FactoMineR:

pca.facto <- FactoMineR::PCA(

X = dtf,

scale.unit = FALSE,

graph = FALSE

)

plot(pca.facto, label = "none")

{FactoMineR}.

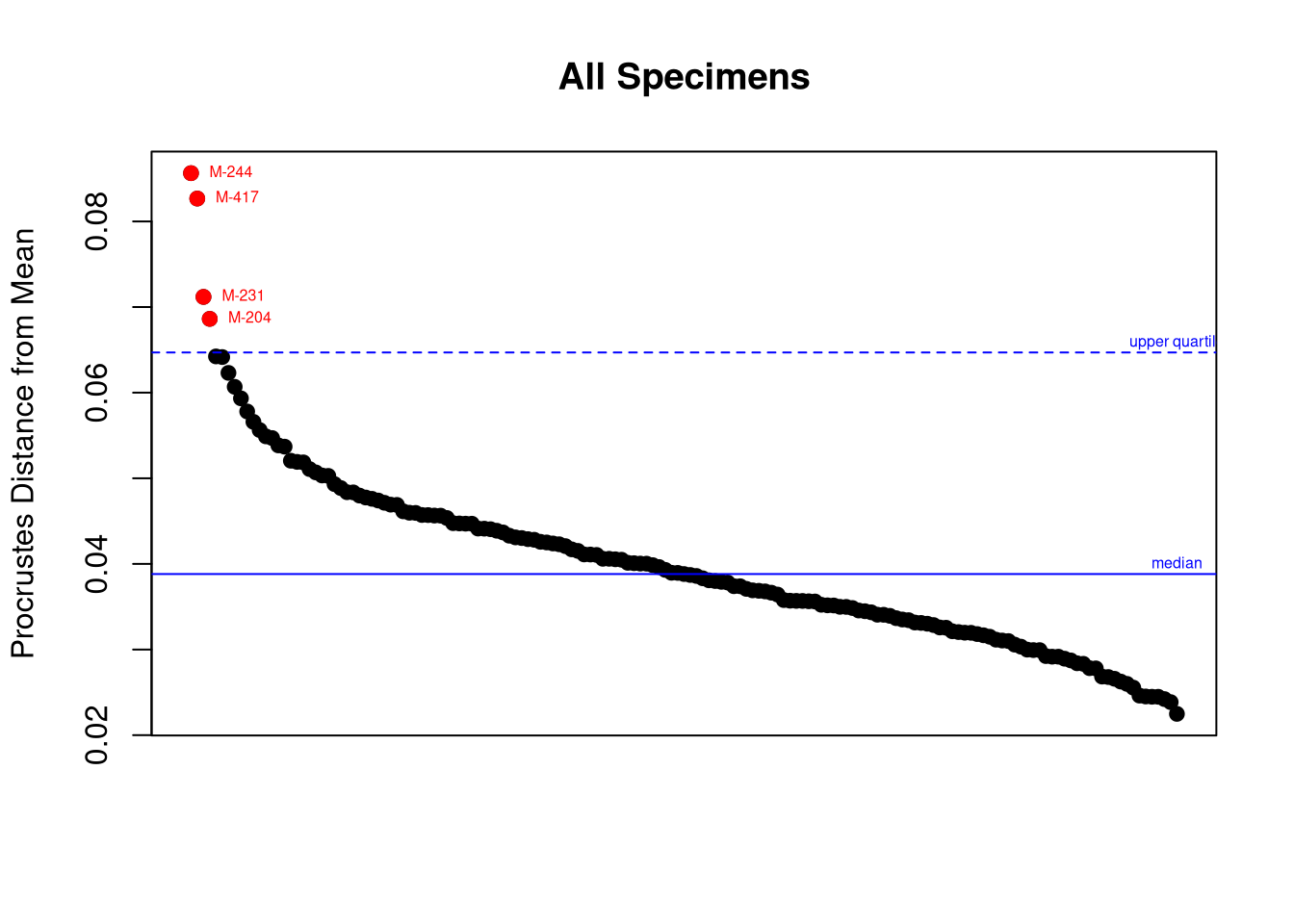

Strong outliers (i.e., individuals with landmarks configurations that are very different from what is usually observed in the rest of the sample) can be spotted on the PCA plot. However, there are several drawbacks in this simple approach:

Thus, we can identify outliers by applying Tukey’s rule2 (Tukey 1977) on the univariate series of procrustes distances from each individual to the mean shape. (Note that this is a way of turning a multivariate problem into an univariate problem.)

plotOutliers(gpa$coords)

Once these individuals are identified, we should go back to their specific model and landmark coordinates to see where was the mistake (if any). This is the role of the argument inspect.outliers = TRUE below:

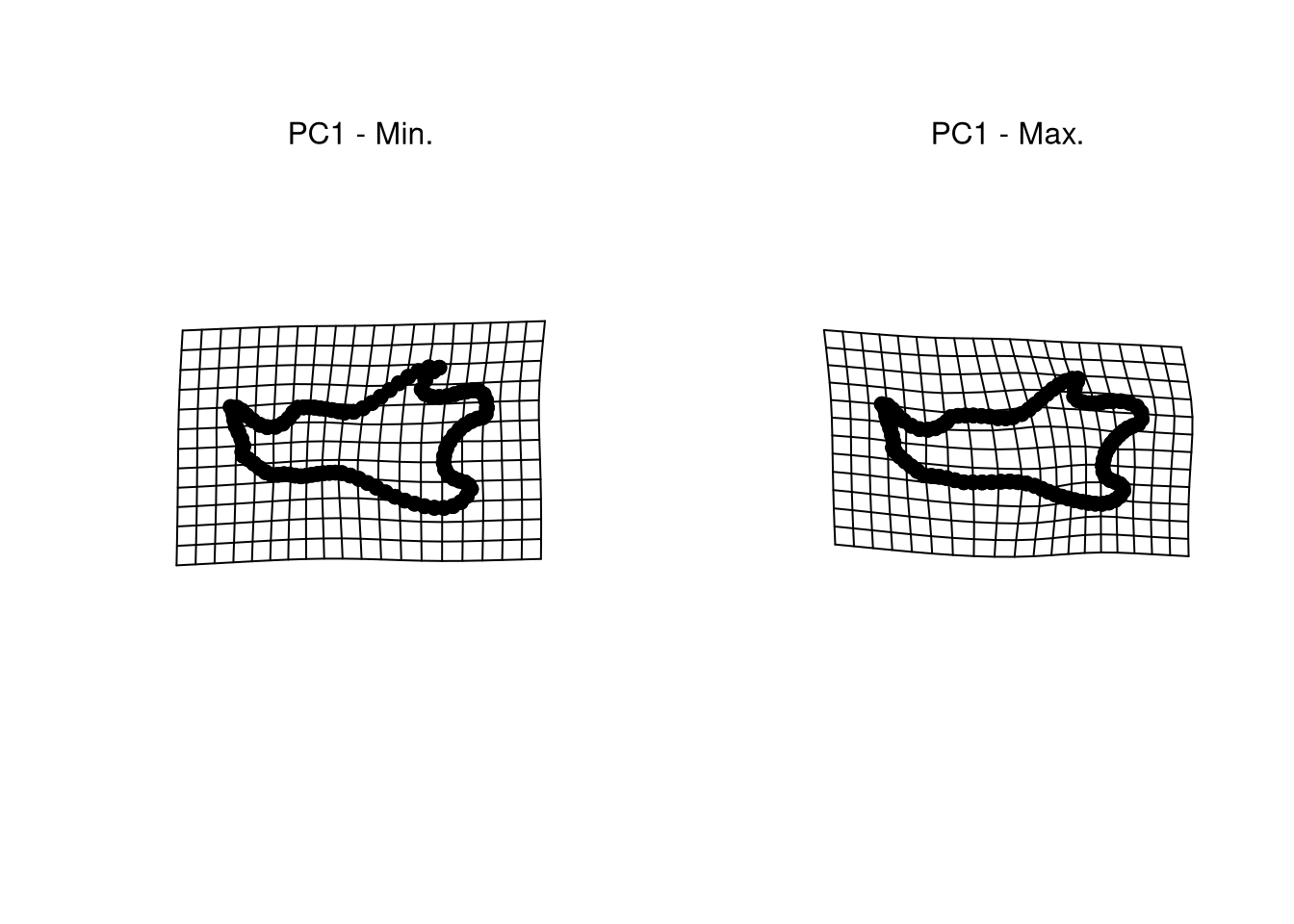

plotOutliers(gpa$coords , inspect.outliers = TRUE)Interpreting PCA plots like Figure 4.2 is very difficult if you don’t know the meaning of each principal axis. The best way for that consists in visualizing the extreme shapes corresponding to the left and right bounds of each axis. This requires to predict the shape at these precise locations.

## Mean shape:

M <- mshape(gpa$coords)

## Predict extreme shapes along PC1:

PC <- pca$x[, 1]

preds <- shape.predictor(

gpa$coords,

x = PC,

Intercept = FALSE,

pred1 = min(PC),

pred2 = max(PC)

) # PC 1 extremes

## Plot extreme shapes:

par(mfrow = c(1, 2))

plotRefToTarget(M, preds$pred1)

mtext("PC1 - Min.")

plotRefToTarget(M, preds$pred2)

mtext("PC1 - Max.")

This is not a trivial question at all. Many methods do exists to identify univariate and multivariate outliers (Santos 2020), but there is still some degree of subjectivity in deciding what exactly an outlier is.↩︎

This is the most common rule for defining univariate outliers, and the rule used to display outliers on boxplots by all statistical software. See also help(boxplot.stats) in R for more details.↩︎